EDITOR’S NOTE: Because enterprise learning involves multiple disciplines and perspectives, we regularly invite experts from other organizations to share their insights. Today, Dr. Anderson Campbell, Product Marketing Director at Intellum, outlines a path to AI maturity for learning and development.

AI in L&D: From Promise to Reality

When Forrester surveyed 300 customer education leaders early in 2024, the signal was unmistakable. More than 99% of respondents said they were planning to leverage AI in L&D within 18 months.

Now, nearly two years later, learning teams no longer consider artificial intelligence a future objective. It is already here, reshaping expectations and redefining what’s possible in corporate learning.

That sounds like success. Yet many organizations are still wading in the shallow end of the pool, focused on improving efficiency by doing the same things faster. We call these activities “microefficiencies.”

The Trend Line: Heading Upstream

Other industry observers confirm this emphasis on operational speed:

- Early this year, Brandon Hall Group said 89% of businesses expected AI to transform the learning function in 2025. Yet, AI use often remains limited to basic content creation and recommendations.

- Similarly, Fosway noted that economic pressure is driving most organizations (64%) to focus on improving learning speed.

However, more recent reports indicate a pivotal change:

- According to Synthesia, the value of AI in L&D is shifting from saving time (88%) to achieving clearer business impact (55%).

- Donald H. Taylor and Egle Vinauskaite concur. Their annual trend analysis shows that 54% of organizations now actively use AI, while 36% are still experimenting. Furthermore, the focus is moving upstream, from operational efficiency toward strategic uses that support broader organizational needs. However, a troubling theme has emerged: poor planning is often a barrier to adoption.

So, what can teams do to move from “microefficiencies” to a more mature role for AI in L&D? Let’s take a closer look…

Microefficiencies = Easy AI First Wins

When AI enters the L&D workflow, teams naturally gravitate toward small, safe applications, such as:

- Content tagging and categorization to facilitate navigation within large libraries

- Automated quiz generation from existing course content

- Summarizing learner feedback for faster analysis

For example, a customer education manager might use AI to summarize Net Promoter Score survey comments and cluster relevant themes. Rethinking these tasks reduces manual labor and shaves minutes or hours off standard turnaround times. It also helps build AI literacy among team members. And because these tasks are low-risk, they’re perfect targets for experimentation.

However, on its own, this adjustment doesn’t improve the learner experience, change customer behavior, or move business metrics.

Certainly, early wins like these are valuable. But they only scratch the surface of AI’s potential. If we stop here, AI remains a novelty rather than a strategic advantage.

The real transformation happens not by accelerating old workflows, but by reimagining them entirely. So, how do we get to the next level, where we can make a deeper impact?

6-Phase Framework for Transforming AI in L&D

To take full advantage of AI, learning leaders need a structured approach that aligns technology with strategy. At Intellum, we use a 6-phase framework to guide that journey:

- Identify & Analyze

- Define Objectives

- Design & Architect

- Develop & Curate

- Implement & Deliver

- Evaluate & Iterate

Here’s how each step works:

1. Identify & Analyze

WHY THIS?

To avoid assumptions and minimize missteps, be sure you understand and document current needs.

Start by mapping bottlenecks, repetitive tasks, and drop-off points in existing workflows. For instance: Which tasks drain your team’s time? Where do learners disengage? These friction points reveal where AI can pack the biggest punch.

TRY THIS:

Inventory the 10 most time-consuming build tasks (such as drafting knowledge checks or tagging media). In parallel, list the 10 moments in the learner journey that cause the most friction (such as a confusing flow or a specific lab experience). These lists become your AI opportunity map.

AN EXAMPLE:

Here’s how one global tech brand recognized the value of this early diagnostic step. Before implementing new solutions, the team analyzed customer onboarding and training data to pinpoint where people were getting stuck or generating high-volume support tickets — especially during self-installation and service activation flows.

This helped them address high-priority moments first, rather than spreading their attention and effort across dozens of potential improvements. By identifying critical points of friction upfront, they followed this universal technology rule: “Don’t automate the wrong things faster.”

2. Define Objectives

WHY THIS?

Current data tells us that most AI efforts aren’t producing a significant impact. But do you know what metric you’re trying to move?

Too often, we apply “AI for AI’s sake.” Instead, set measurable goals tied to learner outcomes and business metrics. For instance, choose objectives like these:

- Reduce average customer onboarding time by 20%

- Increase certification completion rates by 15% among top-tier channel partners

- Improve knowledge retention scores among training program participants by 10%

TRY THIS:

Describe each AI initiative as a hypothesis, with specific, relevant variables you can test.

AN EXAMPLE:

An actionable hypothesis looks like this: “If we deploy AI-generated practice scenarios tailored to each participant’s role and region, then completion rates for Module 2 will increase by 8–10% within one quarter.”
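One way to keep a hypothesis like this honest is to encode it and check pilot results against it. The Python sketch below is a hypothetical illustration: the names `Hypothesis`, `relative_lift`, and `evaluate`, and the baseline and pilot figures, are invented for the example. It also assumes the 8 to 10% target means a relative lift over the baseline completion rate (not percentage points), a definition each team should settle before the pilot starts.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """A testable AI initiative: if we change X, metric Y moves by a target range."""
    intervention: str
    metric: str
    min_lift: float  # lower end of expected relative lift, e.g. 0.08 for 8%
    max_lift: float  # upper end of expected relative lift, e.g. 0.10 for 10%


def relative_lift(baseline: float, observed: float) -> float:
    """Relative change versus baseline (0.50 -> 0.545 is a 9% lift)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (observed - baseline) / baseline


def evaluate(h: Hypothesis, baseline: float, observed: float) -> str:
    """Compare the observed lift with the hypothesis's target range."""
    lift = relative_lift(baseline, observed)
    if lift < h.min_lift:
        verdict = "not supported"
    elif lift <= h.max_lift:
        verdict = "supported"
    else:
        verdict = "exceeded"
    return f"{h.metric}: {lift:+.1%} lift, hypothesis {verdict}"


h = Hypothesis(
    intervention="AI-generated practice scenarios tailored to role and region",
    metric="Module 2 completion rate",
    min_lift=0.08,
    max_lift=0.10,
)
# Hypothetical figures: 50% baseline completion, 54.5% after one quarter
print(evaluate(h, baseline=0.50, observed=0.545))
# prints "Module 2 completion rate: +9.0% lift, hypothesis supported"
```

Writing the unit of measure into the hypothesis up front avoids a common dispute later, when a 4-point gain on a 50% baseline can be reported as either "4%" or "8%."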

3. Design & Architect

WHY THIS?

Rather than replacing instructional design, AI should augment it. This means it’s important to keep learning science front and center: clear outcomes, meaningful practice, timely feedback, and perceived relevance.

TRY THIS:

Implement governance guardrails. For instance:

- Ground generative AI elements with trusted content (docs, release notes, standard operating procedures)

- Track which assets are created by AI, and which are developed or edited by humans

- Require human review for compliance and safety-critical materials

AN EXAMPLE:

Intellum took this approach when building our AI-powered Creator Agent tool. Instead of teaching the system to generate content that “sounded good,” our team trained it to think like an instructional designer.

We brought in learning science and ID experts to define quality. Then we built detailed rubrics to evaluate early outputs, and we iterated on system prompts and logic until the AI reliably demonstrated understanding of how learning objectives, content, and assessments connect.

During testing, learning leaders found that Creator Agent’s outputs — especially learning objectives and assessment items — were consistently clearer, tighter, and more instructionally sound than human-generated versions used as benchmarks.

4. Develop & Curate

WHY THIS?

Use AI to produce outlines, item banks, scenario stems, rubrics, and job-task checklists. Then review everything for accuracy, tone, and inclusivity. AI is great for speed, but humans in the loop ensure better quality.

TRY THIS:

Develop a quality checklist to evaluate and validate all AI-assisted content.

AN EXAMPLE:

Effective AI quality checks include dimensions like these:

- Accuracy: Verified against the source of truth

- Clarity: Plain language, task-focused

- Inclusivity: Names, examples, and contexts that reflect your audience

- Feedback: Actionable, specific, and aligned to rubric criteria

5. Implement & Deliver

WHY THIS?

Manage risk by running a pilot on a single audience or workflow for 30–60 days.

TRY THIS:

Instrument everything. In other words, be sure to quantify engagement levels, attempt data, time-on-task, reattempt rate, and help-desk deflection.
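To make "instrument everything" more concrete, here is a minimal Python sketch that summarizes pilot attempt data. The `Attempt` record, the function names, and the metric definitions (reattempt rate as the share of learner/activity pairs with more than one attempt, help-desk deflection as the relative drop in tickets versus a comparable baseline period) are assumptions for illustration; your LMS or analytics platform may define these metrics differently.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Attempt:
    """One learner attempt at one activity (hypothetical record shape)."""
    learner_id: str
    activity_id: str
    minutes_on_task: float
    passed: bool


def pilot_metrics(attempts: list[Attempt]) -> dict[str, float]:
    """Summarize attempt data; the metric definitions here are assumptions."""
    if not attempts:
        return {"avg_minutes_on_task": 0.0, "reattempt_rate": 0.0, "pass_rate": 0.0}
    # Count attempts per (learner, activity) pair
    per_pair: dict[tuple[str, str], int] = defaultdict(int)
    for a in attempts:
        per_pair[(a.learner_id, a.activity_id)] += 1
    reattempted = sum(1 for n in per_pair.values() if n > 1)
    return {
        "avg_minutes_on_task": sum(a.minutes_on_task for a in attempts) / len(attempts),
        # Share of learner/activity pairs that needed more than one attempt
        "reattempt_rate": reattempted / len(per_pair),
        "pass_rate": sum(a.passed for a in attempts) / len(attempts),
    }


def help_desk_deflection(baseline_tickets: int, pilot_tickets: int) -> float:
    """Relative drop in support tickets versus a comparable baseline period."""
    if baseline_tickets <= 0:
        raise ValueError("baseline_tickets must be positive")
    return (baseline_tickets - pilot_tickets) / baseline_tickets


# Hypothetical pilot data
attempts = [
    Attempt("learner-a", "lab-1", 12.0, False),
    Attempt("learner-a", "lab-1", 8.0, True),
    Attempt("learner-b", "lab-1", 10.0, True),
    Attempt("learner-c", "lab-1", 6.0, True),
]
print(pilot_metrics(attempts))
print(f"deflection: {help_desk_deflection(200, 170):.0%}")
```

Capturing raw attempts rather than pre-aggregated scores keeps these definitions adjustable after the pilot, when you may decide a different metric matters more.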

AN EXAMPLE:

In a pilot of our AI assessment generator, Accenture accepted about 80% of AI-generated questions as-is, reducing assessment creation time by an estimated 270 hours a year. These two metrics, acceptance rate and hours saved, became the go/no-go signals for scaling the workflow more broadly.
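A go/no-go signal like this can be written down as an explicit gate before a pilot begins. The sketch below is hypothetical and does not describe Intellum's or Accenture's actual process: the `PilotResult` record, the thresholds (`min_acceptance`, `min_hours_saved`), and the 500-question pilot size are assumptions; only the roughly 80% acceptance rate and 270 hours come from the example above.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """Outcome of an AI assessment-generation pilot (hypothetical shape)."""
    questions_generated: int
    questions_accepted_as_is: int
    hours_saved_per_year: float


def acceptance_rate(result: PilotResult) -> float:
    """Share of AI-generated questions accepted without edits."""
    if result.questions_generated == 0:
        return 0.0
    return result.questions_accepted_as_is / result.questions_generated


def go_no_go(result: PilotResult,
             min_acceptance: float = 0.75,    # hypothetical threshold
             min_hours_saved: float = 200.0,  # hypothetical threshold
             ) -> bool:
    """Scale the workflow only if BOTH signals clear their thresholds."""
    return (acceptance_rate(result) >= min_acceptance
            and result.hours_saved_per_year >= min_hours_saved)


# Hypothetical pilot: 500 questions generated, 400 accepted as-is (80%)
pilot = PilotResult(questions_generated=500,
                    questions_accepted_as_is=400,
                    hours_saved_per_year=270.0)
print(f"acceptance: {acceptance_rate(pilot):.0%}, scale: {go_no_go(pilot)}")
# prints "acceptance: 80%, scale: True"
```

Requiring both conditions means a high acceptance rate alone cannot trigger a rollout if the time savings are too small to matter.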

6. Evaluate & Iterate

WHY THIS?

Even with thoughtful input and preparation, it’s unrealistic to assume you’ll hit the mark on the first try, and a one-and-done mentality is unlikely to achieve the results you want. You won’t know what is working until you examine outcomes and commit to continuous improvement.

TRY THIS:

Measure impact against your original objectives. Did AI shorten development time? Did it improve completion rates? Did support ticket volumes decrease? How much? Did unexpected outcomes emerge from this process?

Use these quantified insights to isolate issues, refine prompts, adjust workflows, and expand successful applications.

AN EXAMPLE:

Business process outsourcing firm TTEC relies on learning science to drive ongoing improvement of its Learning Wizards Suite. This performance support solution is built on an ecosystem of chatbots that automate learning needs assessment and design workflows. With continuous updates based on more than 60 prompts, along with feedback from design and delivery teams, TTEC is evolving this system into a fully agentic AI solution.

Moving From Faster to Smarter

The leap from microefficiencies to transformation isn’t a one-time event. It’s an ongoing process of rebalancing work, so L&D teams remain focused on high-value learning priorities, such as designing and supporting authentic practice, guiding reflection, and aligning programs with business goals. Meanwhile, AI handles repetitive and scale-intensive implementation processes.

To move up the value chain, keep these 3 horizons in mind:

- ASSIST: Drafts, summaries, tags. (Benefit = Time savings)

- AUGMENT: Personalized practice, adaptive paths, timely nudges, coaching. (Benefit = Improved learning outcomes)

- ARCHITECT: Re-engineer workflows where data, content, and coaching form a cohesive system. (Benefit = Strategic impact)

With thoughtfully designed pilots, most teams can reach the “Augment” horizon within one quarter. Getting to the “Architect” horizon takes more planning (including data plumbing, governance, and change management). But this is where you’ll find a much more durable advantage.

As a growing number of learning teams are discovering, going beyond microefficiencies is well worth the effort. The sooner you step up on the AI maturity ladder, the better your learning program outcomes will be. And with the right moves, organizational performance will follow.

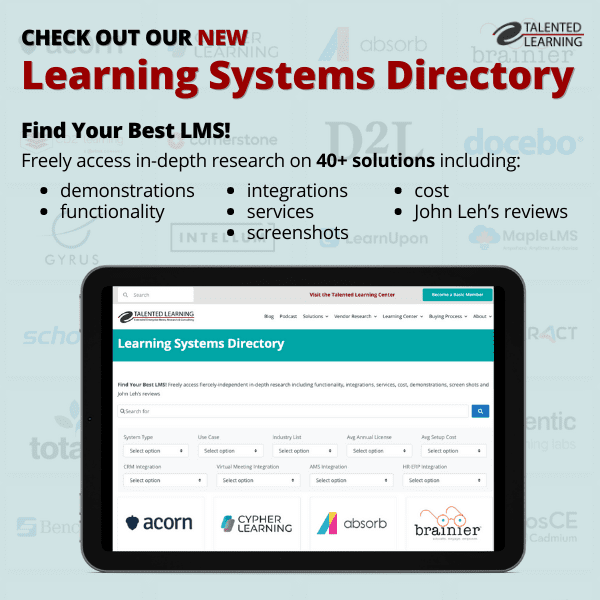

Need an LMS That Supports Strategic AI Goals? Let’s Talk…

For independent advice you can trust, schedule a free 30-minute phone consult with Talented Learning Lead Analyst, John Leh…

*NOTE TO SALESPEOPLE: Want to sell us something? Please contact us via standard channels. Thanks!

Share This Post

Related Posts

How to Find the Best LMS for Your Needs: Customer Ed Nugget 31

When it's time for a new or replacement platform for customer education, how can you find the best LMS for your business? Find out on this Customer Ed Nugget episode...

AI in Corporate Training: What to Expect From Your LMS

AI is making a huge impact on enterprise systems, including LMS platforms. But what does that mean for the future of corporate training? An LMS innovator digs deeper...

Need to Build Workforce AI Skills? Try These Go-To Training Methods

Organizations everywhere are scrambling to develop key workforce AI skills. But which training methods actually work? 13 business leaders share their best advice here...

How Do You Drive AI Adoption? 12 Proven Strategies

Which learning strategies are improving workforce AI adoption? Find how a dozen business leaders are making a positive impact

16 Enterprise LMS Trends Shaping 2026: An Analyst’s View

Learning tech innovation is all around. But which enterprise LMS trends will matter most this year and beyond? See what independent analyst John Leh says...

Top Podcasts and Posts of 2025 – Talented Learning’s Greatest Hits

2025 was our biggest year as an independent source of enterprise learning information. Which were our top podcasts and posts? Take a quick look back with us...

5 Must-Have AI Capabilities for Customer Education: Customer Ed Nugget 30

AI is everywhere. But if you provide customer education, do you know which AI capabilities matter most right now? Find out on this mini-podcast...

Using AI in L&D: How to Chart a Course for Transformation

Many learning teams are using AI for operational efficiency. But are you ready to go to a whole new level with AI in L&D? This guide shows the way...

Say Yes to Skills Disruption: A Guide to Learning Agility

AI and other tech advances are driving demand for many new workforce skills. How can L&D help employers keep up? Check this guide to agile skill development...

FOLLOW US ON SOCIAL